Unveiling Gemini 1.5 Flash: A Game-Changer in Long-Context AI


The landscape of artificial intelligence (AI) is constantly evolving, with models pushing the boundaries of capability and efficiency. Google DeepMind’s latest offering, Gemini 1.5 Flash, stands as a testament to this progress. This blog post dives deep into the groundbreaking features of Flash, exploring its exceptional long-context understanding, impressive performance gains, and potential to revolutionize various generative AI applications.

A Legacy of Advancement: The Gemini 1.5 Family

Gemini 1.5 Flash isn’t an isolated innovation; it’s the culmination of advancements within the Gemini 1.5 family. This series of models is renowned for its multifaceted capabilities, including:

- Efficiency: Gemini models are designed to be resource-conscious, requiring less computing power to train and operate compared to previous generations.

- Reasoning: These models excel at logical deduction, allowing them to tackle complex problems and draw informed conclusions.

- Planning: Gemini models can strategize and plan for future actions, making them valuable for tasks requiring foresight.

- Multilinguality: The ability to understand and process information across various languages makes them ideal for a globalized world.

- Function Calling: Gemini models can decide when a developer-defined function should be called and produce the structured arguments for it, opening doors for advanced automation.
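Function calling is easiest to see in a small example. The sketch below assumes a hypothetical JSON format in which the model names a function and supplies its arguments; the application, not the model, actually runs the function. The `get_weather` tool, the `TOOLS` registry, and the message shape are illustrative assumptions, not the Gemini API.

```python
import json


# Hypothetical tool that the application exposes to the model.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


# Registry mapping tool names to Python callables (illustrative only).
TOOLS = {"get_weather": get_weather}


def dispatch(model_output: str) -> str:
    """Parse a structured function-call request and execute it.

    Assumes the model emits JSON such as
    {"name": "get_weather", "args": {"city": "Paris"}}.
    """
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown function requested: {name}")
    return TOOLS[name](**call.get("args", {}))
```

Keeping an explicit registry means the model can only trigger functions the application has chosen to expose.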

Flash: Pushing the Limits of Performance

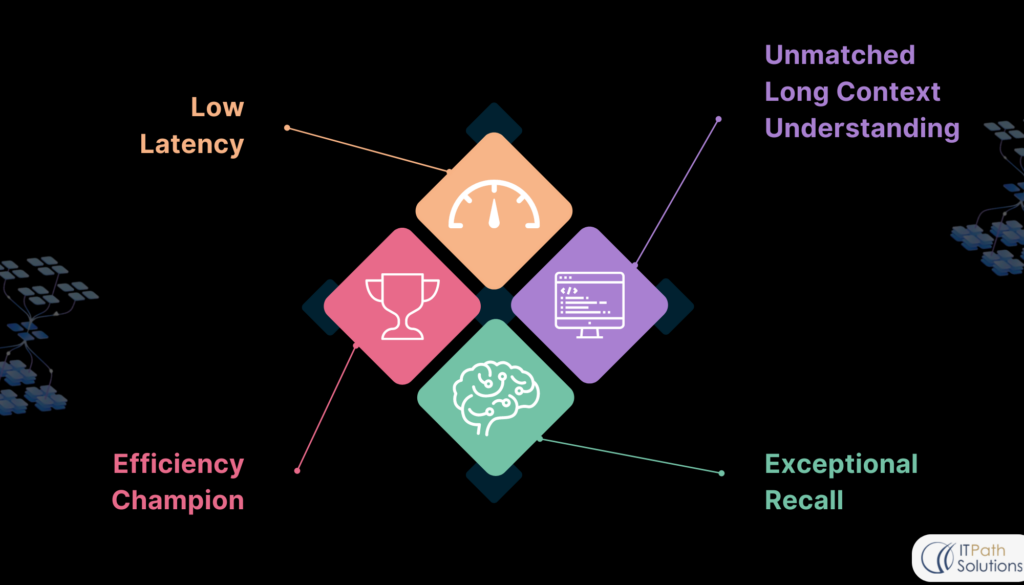

Building upon this solid foundation, Gemini 1.5 Flash takes AI performance to the next level. Here’s a closer look at its defining characteristics:

Fig-2 Solid foundations of Flash

- Unmatched Long Context Understanding: One of Flash’s most remarkable features is its ability to comprehend vast amounts of information. It can process up to 2 million tokens of text, video, or audio data. This allows it to grasp the nuances of long documents, intricate video narratives, and complex code structures, a significant leap over most current models, which are constrained by much smaller context windows.

- Exceptional Recall: Imagine searching through a massive library and pinpointing the exact piece of information you need. Flash excels at this task, achieving near-perfect recall (over 99%) across all modalities. Whether searching for a specific detail within a lengthy legal document, identifying a crucial moment in a historical video, or locating a particular function call within a sprawling codebase, Flash delivers exceptional accuracy.

- Efficiency Champion: Despite its impressive capabilities, Flash prioritizes efficiency. It’s specifically designed to utilize resources optimally, leading to faster response times compared to competing models. This efficiency makes Flash ideal for real-time applications where speed and responsiveness are crucial.

- Low Latency: Flash prioritizes low latency, meaning it generates responses quickly. This is achieved through techniques like parallel computation, which lets Flash perform multiple calculations simultaneously and so reduces response times. Low latency matters most in interactive applications where immediate responses are critical.
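Recall figures like the one above typically come from "needle in a haystack" tests: a single fact is planted somewhere in a long context and the model is asked to retrieve it. The following is a minimal sketch of that evaluation loop. The `stand_in_model` function is a placeholder that simply searches the text; a real test would send the context and a question to the model instead. All names here are illustrative assumptions.

```python
import random


def build_haystack(filler: str, needle: str, n_sentences: int, position: int) -> str:
    """Return n_sentences copies of filler with the needle inserted at position."""
    sentences = [filler] * n_sentences
    sentences.insert(position, needle)
    return " ".join(sentences)


def stand_in_model(context: str, marker: str) -> str:
    """Placeholder for a model call: return the sentence containing marker."""
    for sentence in context.split(". "):
        if marker in sentence:
            return sentence.strip(". ")
    return ""


def recall(n_trials: int = 20, n_sentences: int = 1000, seed: int = 0) -> float:
    """Fraction of trials in which the planted fact is retrieved."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        secret = str(rng.randint(1000, 9999))
        needle = f"The magic number is {secret}."
        position = rng.randint(0, n_sentences)  # vary the depth of the needle
        context = build_haystack("The sky was grey that day.", needle,
                                 n_sentences, position)
        answer = stand_in_model(context, "magic number")
        hits += secret in answer
    return hits / n_trials
```

Because the stand-in is an exact text search, it always scores 1.0; the interesting measurement starts once `stand_in_model` is swapped for a real model and the context length and needle depth are varied.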

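Flash compares well with its predecessors on a wide range of benchmarks. The following table shows Flash’s win rate against Gemini 1.0 Pro and 1.0 Ultra by task category. Flash beats 1.0 Pro on most text and vision benchmarks and is competitive with the much larger 1.0 Ultra, winning a majority of vision benchmarks against it, but it loses to both models on all five audio benchmarks.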

| Gemini 1.5 Flash | Relative to 1.0 Pro | Relative to 1.0 Ultra |
|---|---|---|
| Long-Context Text, Video & Audio | from 32k up to 10M tokens | from 32k up to 10M tokens |
| Core Capabilities | Win-rate: 82.0% (41/50 benchmarks) | Win-rate: 46.7% (21/44 benchmarks) |
| Text | Win-rate: 94.7% (18/19 benchmarks) | Win-rate: 42.1% (8/19 benchmarks) |
| Vision | Win-rate: 90.5% (19/21 benchmarks) | Win-rate: 61.9% (13/21 benchmarks) |
| Audio | Win-rate: 0% (0/5 benchmarks) | Win-rate: 0% (0/5 benchmarks) |

Table-1 Comparison between Gemini 1.5 Flash, 1.0 Pro and 1.0 Ultra (Table courtesy: Google DeepMind)
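A win rate here is simply the number of benchmarks won divided by the number compared. As a quick sanity check, the sketch below recomputes each percentage from the counts quoted in Table-1; the row labels and helper names are my own.

```python
def win_rate(wins: int, total: int) -> float:
    """Win rate as a percentage, rounded to one decimal place."""
    return round(100 * wins / total, 1)


# (row label, wins, benchmarks compared, win rate quoted in Table-1)
ROWS = [
    ("Core vs 1.0 Pro", 41, 50, 82.0),
    ("Core vs 1.0 Ultra", 21, 44, 46.7),
    ("Text vs 1.0 Pro", 18, 19, 94.7),
    ("Text vs 1.0 Ultra", 8, 19, 42.1),
    ("Vision vs 1.0 Pro", 19, 21, 90.5),
    ("Vision vs 1.0 Ultra", 13, 21, 61.9),
    ("Audio vs 1.0 Pro", 0, 5, 0.0),
    ("Audio vs 1.0 Ultra", 0, 5, 0.0),
]


def mismatches(rows):
    """Return (label, quoted, recomputed) for rows where the two disagree."""
    return [
        (label, quoted, win_rate(wins, total))
        for label, wins, total, quoted in rows
        if win_rate(wins, total) != quoted
    ]
```

Only the core-capabilities row against 1.0 Ultra is flagged: 21/44 works out to about 47.7%, whereas the quoted 46.7% corresponds to 21/45, so one of the two figures in that cell may be a transcription slip.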

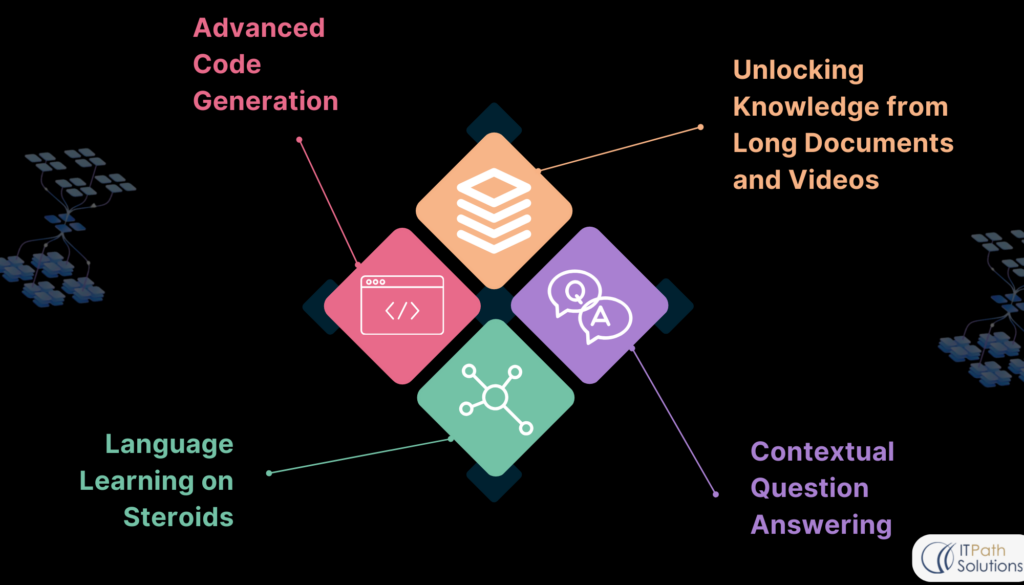

Real-World Applications: Where Flash Shines

The power of Gemini 1.5 Flash extends far beyond theoretical benchmarks. Here are some concrete examples of how Flash can revolutionize various fields:

Fig-3 Real-world applications of Flash

- Unlocking Knowledge from Long Documents and Videos: Research scholars and analysts can leverage Flash’s long-context understanding to extract critical insights from lengthy documents, research papers, or historical video archives. Imagine a historian analyzing hours of footage to pinpoint a specific leader’s speech or a scientist sifting through extensive research papers to identify a crucial experiment – Flash can expedite these processes significantly.

- Contextual Question Answering: Traditional AI models often struggle with questions that require understanding the broader context. Flash, with its exceptional long-context processing abilities, can answer intricate questions based on vast amounts of information. This opens doors for applications like intelligent tutoring systems, legal research assistants, or medical diagnosis support tools.

- Language Learning on Steroids: Learning a new language traditionally requires dedicated study and immersion. Flash demonstrates the potential to revolutionize language acquisition by learning from limited data sets. Imagine learning a rare or endangered language with minimal resources – Flash paves the way for such possibilities.

- Advanced Code Generation: Programmers often spend significant time writing repetitive or boilerplate code. Flash’s ability to understand and generate complex code structures can significantly improve developers’ productivity. Imagine Flash automatically generating code snippets from natural language instructions or completing missing sections within a codebase – this has the potential to streamline the software development process.
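For the document-analysis use cases above, a practical first question is whether the material fits in the context window at all. Here is a rough sketch of that check, using the 2 million token figure quoted in this post. The 4-characters-per-token ratio is a common rule of thumb for English text and is an assumption, as is the output reserve; a real application should count tokens with the model's own tokenizer.

```python
CONTEXT_WINDOW_TOKENS = 2_000_000  # figure quoted in this post for Flash
CHARS_PER_TOKEN = 4                # rough heuristic (assumption); tokenizers vary


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(documents: list[str], reserved_for_output: int = 8_000) -> bool:
    """Check whether all documents plus an output reserve fit in one request."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

If the check fails, the usual fallbacks are to split the material across several requests or to retrieve only the relevant passages first.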


A Technical Glimpse: Under the Hood of Flash

For readers with a technical background, here is a closer look at the inner workings of Flash:

- Transformer Decoder Model Architecture: Flash utilizes a transformer decoder model architecture, which allows it to efficiently process sequential data while analyzing relationships between different elements.

- Online Distillation for Enhanced Efficiency: Flash leverages a technique called online distillation, in which the knowledge and capabilities of a larger model are compressed into a smaller, more efficient one. This lets Flash retain much of the larger model’s performance while using far fewer resources.

- Low Latency for Real-Time Applications: Flash is specifically designed for low latency, meaning it can generate responses quickly. This is achieved through techniques like parallel computation.
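The post does not describe how Flash's distillation is implemented. As a generic illustration of the idea, the sketch below computes the standard knowledge-distillation objective: the KL divergence between the teacher's and the student's temperature-softened output distributions. In an online setup the teacher's outputs would be produced during student training rather than precomputed; that reading of the term, the temperature value, and all function names are assumptions, not a description of Google's pipeline.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)  # teacher: the target distribution
    q = softmax(student_logits, temperature)  # student: the one being trained
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what pushes the smaller model toward the larger model's behavior.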

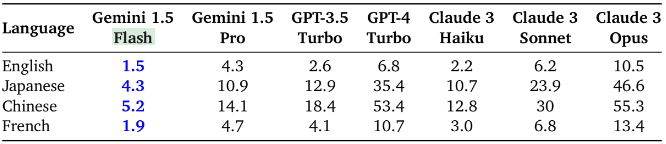

Fig-4 Latency comparison with various models (Image courtesy: Google DeepMind)

Time per output character (ms) of various APIs for English, Japanese, Chinese, and French responses, given inputs of 10,000 characters. Across all four evaluated languages, Gemini 1.5 Flash yields the fastest output generation of all models, and Gemini 1.5 Pro shows faster generation than GPT-4 Turbo, Claude 3 Sonnet, and Claude 3 Opus (see Table 3). For English queries, Gemini 1.5 Flash generates over 650 characters per second, more than 30% faster than Claude 3 Haiku, the second fastest of the models evaluated.
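The figure reports milliseconds per character while the text quotes characters per second, so a small conversion helps when comparing the two. The sketch below also derives what the "more than 30% faster" claim implies for the second-fastest model, assuming "30% faster" means a 1.3x ratio of characters per second (my reading, not stated in the source).

```python
def ms_per_char(chars_per_second: float) -> float:
    """Convert a generation speed into time per output character."""
    return 1000.0 / chars_per_second


def implied_max_speed(faster_speed: float, pct_faster: float) -> float:
    """Upper bound on the slower model's speed, given the quoted speed-up."""
    return faster_speed / (1 + pct_faster / 100)


flash_ms = ms_per_char(650)                # roughly 1.54 ms per character
haiku_bound = implied_max_speed(650, 30)   # roughly 500 characters per second
```

Under that reading, 650 characters per second is about 1.54 ms per character, and Claude 3 Haiku would be generating at most about 500 characters per second for English.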

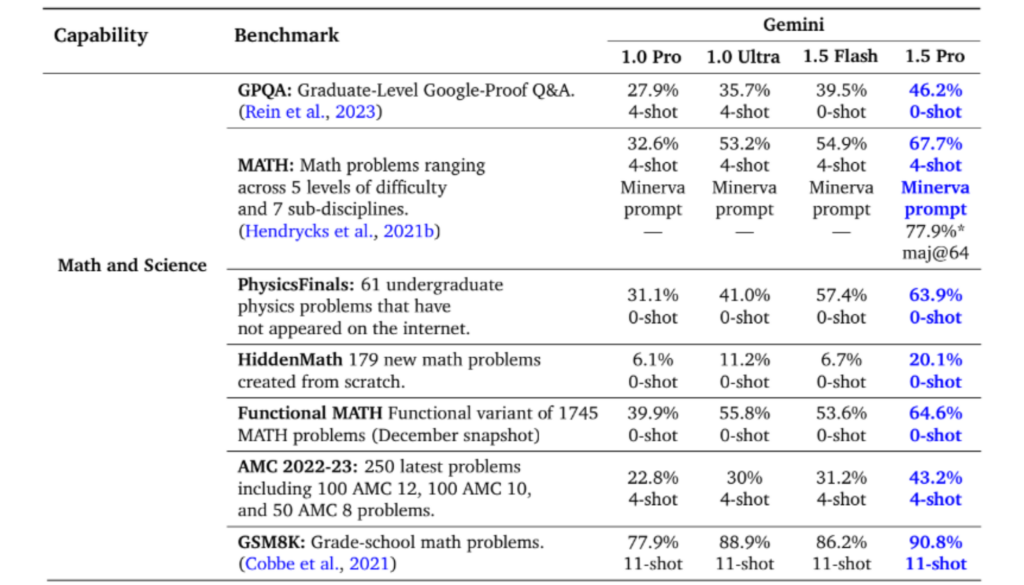

Core Text Evaluation: A Benchmark of Success

Flash was evaluated on seven critical text-based capabilities, alongside vision and audio. The table below summarizes the findings: Flash improves on Gemini 1.0 Pro in every text and vision category, but trails both 1.0 models in audio.

| Modality | Core Capability | 1.5 Pro vs. 1.5 Pro (Feb) | 1.5 Pro vs. 1.0 Pro | 1.5 Pro vs. 1.0 Ultra | 1.5 Flash vs. 1.0 Pro | 1.5 Flash vs. 1.0 Ultra |
|---|---|---|---|---|---|---|
| Text | Math, Science & Reasoning | +5.9% | +49.6% | +18.1% | +30.8% | +4.1% |
| Text | Multilinguality | -0.7% | +21.4% | +5.9% | +16.7% | +2.1% |
| Text | Coding | +11.6% | +21.5% | +11.7% | +10.3% | +1.5% |
| Text | Instruction following | n/a | +9.9% | -0.2% | +8.7% | -1.2% |
| Text | Function calling | n/a | +72.8% | n/a | +54.6% | n/a |
| Vision | Multimodal reasoning | +15.5% | +31.5% | +14.8% | +15.6% | +1.0% |
| Vision | Charts & Documents | +8.8% | +63.9% | +39.6% | +35.9% | +17.9% |
| Vision | Natural images | +8.3% | +21.7% | +8.1% | +18.9% | +5.6% |
| Vision | Video understanding | -0.3% | +18.7% | +2.1% | +7.5% | -8.1% |
| Audio | Speech recognition | +1.0% | +2.2% | -3.8% | -17.9% | -25.5% |
| Audio | Speech translation | -1.7% | -1.5% | -3.9% | -9.8% | -11.9% |

Table-2 Improvement in core abilities compared to previous models (Table courtesy: Google DeepMind)

Here’s a glimpse into some key areas:

- Math and Science: Flash makes clear progress on complex mathematical and scientific problems. In the Math, Science & Reasoning row of Table-2, it improves on Gemini 1.0 Pro by 30.8% and on 1.0 Ultra by 4.1%; the 49.6% gain in that row belongs to Gemini 1.5 Pro. The evaluation includes the challenging Hendrycks MATH benchmark, which covers middle and high-school level mathematics, and Flash also shows gains on physics and advanced math problems.

Fig-5 Improvement in Math & Science (Image courtesy: Google DeepMind)

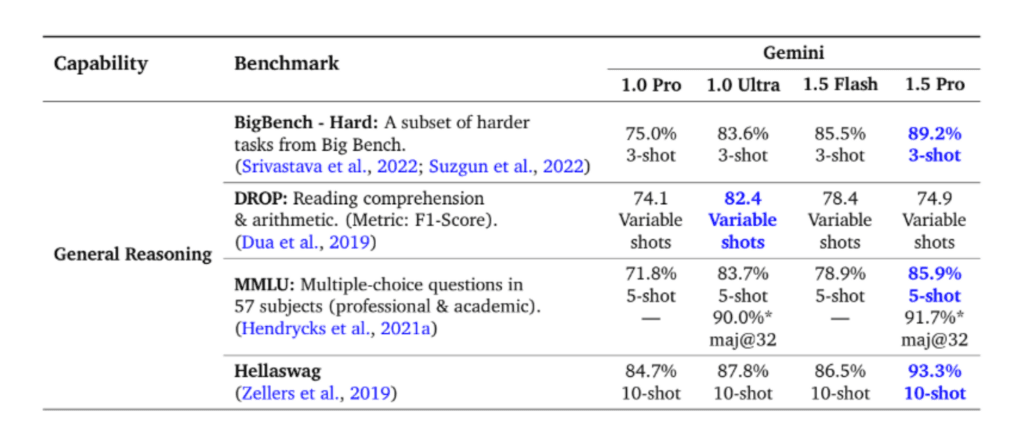

- General Reasoning: Flash excels at tasks requiring logical deduction, multi-step reasoning, and applying common sense. It outperforms Gemini 1.0 Pro on benchmarks like BigBench-Hard, a curated set of challenging tasks designed to assess complex reasoning abilities. This signifies Flash’s capability to navigate intricate situations and draw informed conclusions.

Fig-6 Improvement in General reasoning (Image courtesy: Google DeepMind)

Beyond Text: Flash’s Multimodal Prowess

While text comprehension remains a core strength, Flash extends its capabilities to other modalities like vision and audio. Here’s a look at its performance:

- Vision: Flash improves on tasks involving multimodal reasoning (combining information from text and images) and on interpreting charts, documents, and natural images. On Charts & Documents it gains 35.9% over Gemini 1.0 Pro and 17.9% over 1.0 Ultra (Table-2); the 63.9% figure in that row is Gemini 1.5 Pro’s gain over 1.0 Pro. Video understanding is the exception: Flash is 7.5% ahead of 1.0 Pro but 8.1% behind 1.0 Ultra.


- Benchmarking Against the Competition: A comparative analysis of Flash against other leading models highlights its strengths in long-context understanding, efficiency, and response speed.

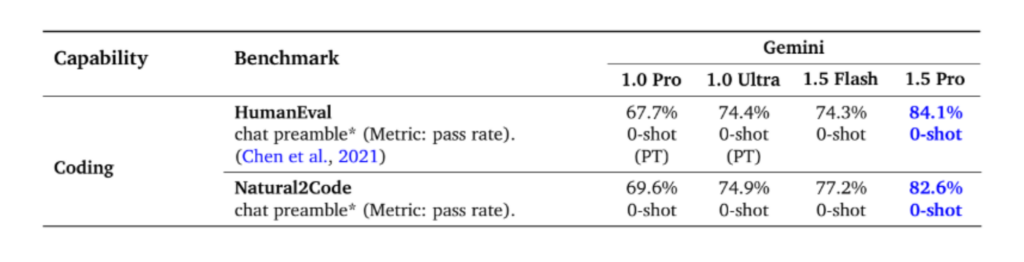

A Champion for Code Generation

Flash is a strong code generator, although Table-2 shows Gemini 1.5 Pro leading the family: Flash gains 10.3% over Gemini 1.0 Pro and 1.5% over 1.0 Ultra in coding, against 21.5% and 11.7% for 1.5 Pro. The coding evaluation includes the HumanEval benchmark and Natural2Code, an internal test designed to prevent data leakage. These results indicate Flash’s potential to speed up development by automating repetitive tasks and assisting programmers with code generation.

Fig-7 Improvement in Code generation
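HumanEval results are usually reported as pass@k: the probability that at least one of k generated solutions passes the unit tests for a problem. The post does not say which k was used, so the sketch below only shows how the metric is commonly estimated from n samples of which c are correct; the function name and parameters are my own.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n generated samples, c of them correct."""
    if n - c < k:
        # Fewer than k incorrect samples: every draw of k contains a correct one.
        return 1.0
    # 1 minus the probability that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

For example, with 10 samples of which 3 pass, `pass_at_k(10, 3, 1)` comes to about 0.3, matching the intuition that a single random sample succeeds 30% of the time.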

Looking Ahead: The Future of Long-Context AI

The potential of long-context AI extends far beyond the capabilities of Flash. As research progresses, we can expect even more advanced models capable of:

- Understanding even larger contexts: Imagine models that can process and reason across billions of tokens, enabling them to grasp complex narratives across historical data sets or analyze intricate scientific simulations.

- Reasoning across different modalities: Many models still focus primarily on a single modality (text, video, or audio). The future holds promise for models that can seamlessly combine information from various sources, leading to a more comprehensive understanding of the world.

- Personalization and User-Specific Context: AI models can become increasingly attuned to individual user preferences and context. Imagine a system that tailors its responses based on your past interactions and current needs.

The possibilities with long-context AI are vast and exciting. As models like Gemini 1.5 Flash continue to push the boundaries, we can expect a future where AI becomes an even more powerful tool for exploration, discovery, and progress.


Conclusion: A New Dawn for AI

The arrival of Gemini 1.5 Flash marks a significant milestone in the evolution of AI. Its ability to process vast amounts of information unlocks a new level of understanding and interaction with the world around us. Flash’s efficiency and speed make it a practical tool for real-world applications, impacting various fields from research and education to software development and language learning. As AI continues to evolve, models like Flash pave the way for a future where intelligent systems seamlessly integrate into our lives, empowering us to achieve more and unlock new possibilities.
